Deep Learning Engine API

This document details the API for building Learning Engines for Wealth-Lab's Deep Learning Extension. A Learning Engine produces a Model that accepts Inputs (in the form of WL8 indicators) and desired Outputs (also WL8 indicators). The Outputs are typically future data, such as the percentage return 5 bars in the future. The Model trains on the data through a number of cycles called Epochs. Throughout training, the Model evaluates its performance on out-of-sample data, reporting both its in-sample and out-of-sample performance back to Deep Learning.

Build Environment

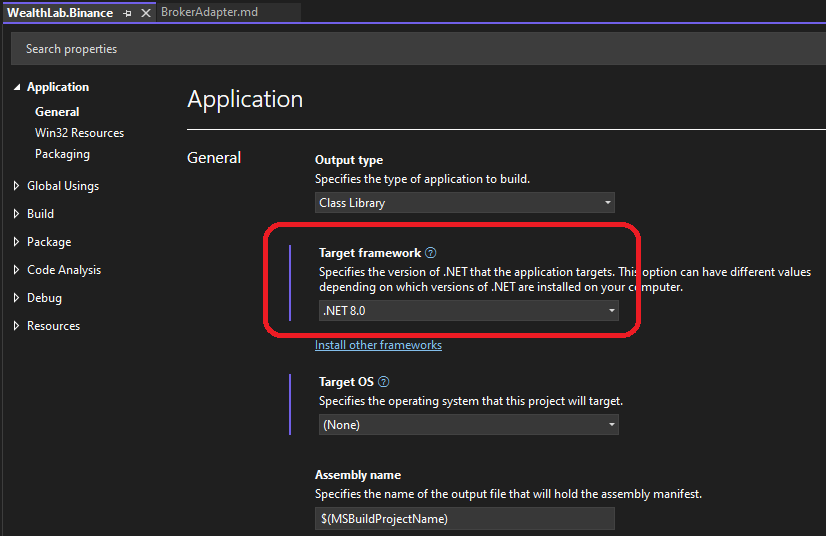

You can create a Learning Engine in a .NET development tool such as Visual Studio 2022.

Create a class library project that targets .NET 8, then add a reference to the WealthLab.DeepLearning library DLL, which you'll find in the WL8 installation folder.

Note: Visual Studio 2022 must be updated to at least version 17.8.6 to use .NET 8.

Your Learning Engine will be a class in this library that descends from LearningEngineBase, which is defined in the WealthLab.DeepLearning library, in the WealthLab.DeepLearning namespace. After you implement and build your library, simply copy the resulting assembly DLL into the WL8 installation folder. The next time WL8 starts up, it will discover your Learning Engine, making it available in appropriate locations of the WL8 user interface.

Accessing the Host (WL8) Environment

The IHost interface allows your extension to access information about the user's WealthLab environment, for example the location of the user data folder or the list of DataSets the user has defined. At any point in your extension's code, you can access an instance of IHost through the Instance property of the singleton class WLHost. Example:

//get user data folder
string folder = WLHost.Instance.DataFolder;

Descriptive Properties

Override the following properties that provide descriptive details about your Learning Engine.

public abstract string Name

Return a descriptive name for your Learning Engine that will be used throughout Deep Learning.

public virtual string Description

Return a brief description of your Learning Engine. This is displayed when the user selects or configures your Learning Engine within the Deep Learning extension.

public virtual string URL

Return a URL that points to a web site offering more details about the library or package used to implement your Learning Engine.

public virtual string GlyphResource

Return a string that references an image file in your project that will be used as the Learning Engine's icon in Deep Learning. A PNG image of 24x24 pixels is recommended. Add the file to your project with its Build Action set to Embedded Resource. Use a period-delimited string that includes your primary project name and any sub-folders. For example, the Encog Learning Engine in Deep Learning returns the following string:

public override string GlyphResource => "WealthLab.DeepLearning.Glyphs.Encog.png";
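The string mirrors the manifest-resource name the build gives an Embedded Resource: the project's root namespace (which defaults to the project name), then any sub-folders, then the file name, all joined by periods. The C# sketch below composes such a name. ResourceName is a hypothetical helper, and it does not reproduce any adjustments the build may make to folder names that are not valid identifiers (for example, names containing spaces).

```csharp
using System;
using System.Reflection;

// Hypothetical helper: builds an embedded-resource name from a root namespace
// and a project-relative path such as "Glyphs/Encog.png".
static string ResourceName(string rootNamespace, string relativePath)
{
    // Accept either separator style, then join the path segments with periods.
    string dotted = relativePath.Replace('\\', '/').Trim('/').Replace('/', '.');
    return rootNamespace + "." + dotted;
}

string name = ResourceName("WealthLab.DeepLearning", "Glyphs/Encog.png");
Console.WriteLine(name); // WealthLab.DeepLearning.Glyphs.Encog.png

// Lists the resource names actually compiled into this assembly.
// A program with no Embedded Resource items has none to list.
foreach (string res in Assembly.GetExecutingAssembly().GetManifestResourceNames())
    Console.WriteLine(res);
```

If the icon does not appear, listing the names returned by Assembly.GetManifestResourceNames() from inside your own library is a quick way to confirm the exact string to return.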

public virtual bool DisableGlyphReverse

By default, WL8 inverts your glyph image (a negative reversal) when the Dark Theme is active. Override this property to return true to disable that reversal.

Learning Engine Parameters

public ParameterList Parameters

public virtual void GenerateParameters()

Each Learning Engine can expose its own set of parameters that are configurable on a Model-by-Model basis. Because LearningEngineBase derives from Configurable, it inherits Configurable's Parameters property and GenerateParameters method. Override GenerateParameters to add Parameter instances to the Parameters property; these appear on Deep Learning's "Parameters" page.

Hidden Layers

public virtual ParameterList HiddenLayerParameters => null;

Some Learning Engines allow the addition and customization of Hidden Layers that exist between the Input Layer and the Output Layer. If your Learning Engine supports Hidden Layers, override the HiddenLayerParameters property to return a ParameterList that contains the Parameters a user can configure for each Hidden Layer.

Initialization

public virtual void Initialize()

Override Initialize to perform any one-time initialization required by the Learning Engine.

public virtual void InitializeNewModel(Model mdl)

The default implementation adds to the Model one Input set to RSI(4) with a Depth of 5, one Hidden Layer (if the Learning Engine supports Hidden Layers), and one Output set to ROC(4) with a 4-bar Look Ahead.

If your Learning Engine would be better served by a different initial Model state, override InitializeNewModel and provide your own implementation. You can create instances of the InputOutputNode class and add them to the InputNodes and OutputNodes properties of the Model (the mdl parameter). Below is the code for the default implementation.

public virtual void InitializeNewModel(Model mdl)
{
    InputOutputNode inputNode = new InputOutputNode(true); //true=Input
    inputNode.Depth = 5;
    mdl.InputNodes.Add(inputNode);

    InputOutputNode outputNode = new InputOutputNode(false); //false=Output
    outputNode.Shift = 4; //4-bar Look Ahead
    outputNode.Parameters[1].Value = 4; //the 4 in ROC(4)
    mdl.OutputNodes.Add(outputNode);

    if (SupportsHiddenLayers)
    {
        //use Clone to give each Hidden Layer its own Parameters instance
        ParameterList hiddenLayer = HiddenLayerParameters.Clone();
        mdl.HiddenLayers.Add(hiddenLayer);
    }
}
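To make the Look Ahead concrete: assuming an Output's Shift of 4 pairs each bar with the Output indicator's value 4 bars later, the default ROC(4) Output asks the Model to predict, at bar i, the percentage change from bar i to bar i + 4. The C# sketch below computes that target series. Roc and ShiftBack are hypothetical helpers using the standard percentage rate-of-change formula, not WL8 APIs, and the prices are made up for illustration.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: percentage rate of change over `period` bars; NaN until
// enough history exists.
static double[] Roc(IReadOnlyList<double> prices, int period)
{
    var roc = new double[prices.Count];
    for (int bar = 0; bar < prices.Count; bar++)
        roc[bar] = bar < period
            ? double.NaN
            : (prices[bar] - prices[bar - period]) / prices[bar - period] * 100.0;
    return roc;
}

// Hypothetical helper: pairs each bar with the value `lookAhead` bars later.
// The last `lookAhead` bars have no future value and are left as NaN.
static double[] ShiftBack(double[] series, int lookAhead)
{
    var shifted = new double[series.Length];
    for (int bar = 0; bar < series.Length; bar++)
        shifted[bar] = bar + lookAhead < series.Length ? series[bar + lookAhead] : double.NaN;
    return shifted;
}

double[] close = { 100, 101, 99, 102, 110, 108, 112, 115 }; // illustrative prices
double[] target = ShiftBack(Roc(close, 4), 4);
// target[0] is the change from bar 0 (100) to bar 4 (110), in percent.
Console.WriteLine(target[0]); // 10
```

Bars near the end of the history have no future value, so in this sketch their targets are NaN.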

Data Normalization

public virtual bool RequiresNormalization => true;

Many Learning Engines require Input data to be normalized before training. If your Learning Engine is an exception, or is an exception only under certain Parameter settings, override RequiresNormalization and return false when appropriate.

If normalization is enabled, Deep Learning provides two built-in types of normalization, and you also have the option of coding your own. The normalization method can be configured separately for Inputs and Outputs.

public virtual bool UseStdDevNormInput => false;

public virtual bool UseStdDevNormOutput => false;

Override these properties to return true when your Learning Engine should use standard deviation (unit variance) normalization. This scales the data so that it has a standard deviation of 1, a form that suits many types of neural network activation functions.
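A C# sketch of standard deviation normalization for one series, assuming the common z-score form z = (x - mean) / sd, which also centers the data on a mean of 0. StdDevNormalize and StdDevDeNormalize are hypothetical helpers; Deep Learning's built-in version may differ in details such as population versus sample standard deviation.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper: z-score normalization of one series, in place.
// Returns the mean and standard deviation needed to reverse the transform.
static (double Mean, double StdDev) StdDevNormalize(List<double> vals)
{
    if (vals.Count == 0) return (0.0, 1.0);
    double mean = vals.Average();
    // Population standard deviation; a constant series falls back to 1 to avoid dividing by 0.
    double sd = Math.Sqrt(vals.Sum(v => (v - mean) * (v - mean)) / vals.Count);
    if (sd == 0.0) sd = 1.0;
    for (int i = 0; i < vals.Count; i++)
        vals[i] = (vals[i] - mean) / sd;
    return (mean, sd);
}

// Hypothetical helper: reverses StdDevNormalize using the saved statistics.
static void StdDevDeNormalize(List<double> vals, (double Mean, double StdDev) stats)
{
    for (int i = 0; i < vals.Count; i++)
        vals[i] = vals[i] * stats.StdDev + stats.Mean;
}

var data = new List<double> { 2, 4, 4, 4, 5, 5, 7, 9 };
var dataStats = StdDevNormalize(data);
Console.WriteLine($"mean={dataStats.Mean}, sd={dataStats.StdDev}"); // mean=5, sd=2
// data is now -1.5, -0.5, -0.5, -0.5, 0, 0, 1, 2
```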

public virtual double InputNormMin => -1.0;

public virtual double InputNormMax => 1.0;

public double InputNormRange => InputNormMax - InputNormMin;

public virtual double OutputNormMin => -1.0;

public virtual double OutputNormMax => 1.0;

public virtual double OutputNormRange => OutputNormMax - OutputNormMin;

If standard deviation normalization is not used, normalization defaults to a fixed range: data is transformed to fall between a minimum and a maximum value, given by InputNormMin and InputNormMax for Inputs and by OutputNormMin and OutputNormMax for Outputs (-1.0 and 1.0 by default).
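A fixed-range transform is commonly implemented as min-max scaling. Assuming that form, each value x becomes min + (x - dataMin) / (dataMax - dataMin) * range, where min is InputNormMin (or OutputNormMin), range is InputNormRange (or OutputNormRange), and dataMin and dataMax are the smallest and largest values in the series. A C# sketch with hypothetical helper names:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper: scales vals in place into [normMin, normMax]
// (e.g. InputNormMin/InputNormMax) and returns the observed data range.
static (double DataMin, double DataMax) RangeNormalize(List<double> vals, double normMin, double normMax)
{
    if (vals.Count == 0) return (0.0, 0.0);
    double dataMin = vals.Min(), dataMax = vals.Max();
    double dataRange = dataMax - dataMin;
    double normRange = normMax - normMin; // plays the role of InputNormRange/OutputNormRange
    for (int i = 0; i < vals.Count; i++)
        vals[i] = dataRange == 0.0
            ? normMin + normRange / 2.0 // constant series: map to the midpoint
            : normMin + (vals[i] - dataMin) / dataRange * normRange;
    return (dataMin, dataMax);
}

// Hypothetical helper: maps values from [normMin, normMax] back to the data range.
static void RangeDeNormalize(List<double> vals, (double DataMin, double DataMax) range, double normMin, double normMax)
{
    double normRange = normMax - normMin;
    for (int i = 0; i < vals.Count; i++)
        vals[i] = range.DataMin + (vals[i] - normMin) / normRange * (range.DataMax - range.DataMin);
}

var rsi = new List<double> { 20, 50, 80 };
var rsiRange = RangeNormalize(rsi, -1.0, 1.0);
Console.WriteLine(string.Join(", ", rsi)); // -1, 0, 1
```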

public virtual void Normalize(List<double> vals, InputOutputNode node)

public virtual void DeNormalize(List<double> vals, InputOutputNode node)

If neither of these two normalization methods is sufficient, override the Normalize and DeNormalize methods to implement your own normalization routine. Given the values in the vals parameter (a List<double>), calculate the appropriate normalization and overwrite the values in the vals List. DeNormalize performs the reverse transformation.
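A custom pair must be exactly reversible, so whatever statistics Normalize computes have to be remembered for DeNormalize. The C# sketch below uses max-absolute scaling (dividing by the largest magnitude), chosen only as an example of a method neither built-in option provides, and stores the scale factor per node in a dictionary. A string key stands in for the InputOutputNode parameter, and the local Normalize and DeNormalize functions are hypothetical stand-ins for the real overrides.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Per-node scale factors remembered between Normalize and DeNormalize.
// The string key is a hypothetical stand-in for the InputOutputNode argument.
var scales = new Dictionary<string, double>();

// Max-absolute scaling: divide by the largest magnitude so values fall in [-1, 1]
// and zero stays at zero.
void Normalize(List<double> vals, string nodeKey)
{
    double maxAbs = vals.Count == 0 ? 0.0 : vals.Max(v => Math.Abs(v));
    double scale = maxAbs == 0.0 ? 1.0 : maxAbs;
    scales[nodeKey] = scale; // keep the factor so the transform can be reversed
    for (int i = 0; i < vals.Count; i++)
        vals[i] /= scale; // overwrite in place, as the contract above requires
}

// Inverse transform, using the factor recorded for the same node.
void DeNormalize(List<double> vals, string nodeKey)
{
    double scale = scales[nodeKey];
    for (int i = 0; i < vals.Count; i++)
        vals[i] *= scale;
}

var roc = new List<double> { -2.0, 0.5, 4.0 };
Normalize(roc, "ROC(4)");   // roc now holds -0.5, 0.125, 1
DeNormalize(roc, "ROC(4)"); // roc is restored to -2, 0.5, 4
```

This page does not say whether Normalize is also called on new data at prediction time; if it is, consider reusing a stored factor rather than recomputing it, so that predictions are scaled the same way as the training data.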

Training

public abstract void CreateNetwork(Model model)

Deep Learning calls CreateNetwork when it needs the Learning Engine to create its internal objects to support the training and/or prediction of the Model specified in the model parameter.

public abstract bool ReadyForPrediction { get; }

Override this property to return true if the internal objects required by your Learning Engine have been created, which happens through a call to either CreateNetwork or LoadState.

public abstract void SetupTraining(Model model)

Deep Learning calls SetupTraining when a new training session is starting for the Model specified in the model parameter. At this stage, convert the training data supplied in the Model instance into a form suitable for your Learning Engine's native framework. The following properties of the Model class contain the relevant data; all data in these lists are already normalized.

- PreparedInSampleInputs

- PreparedInSampleOutputs

- PreparedOutOfSampleInputs

- PreparedOutOfSampleOutputs

Each of these properties is a List<List<double>>. The outer List contains one entry for each Input (or Output) in the Model's architecture, and each inner List contains that Input's (or Output's) actual values. For example, if the Model has two Inputs and the in-sample data spans 1,000 bars, PreparedInSampleInputs contains two List<double> instances, each holding the 1,000 values of the corresponding Input.
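Many native frameworks expect one row of features per sample, whereas these properties hold one series per Input. A C# sketch of the conversion, assuming every inner List has the same length (one value per bar); ToRows is a hypothetical helper:

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: turns one-series-per-Input data into one row per bar.
static double[][] ToRows(List<List<double>> columns)
{
    if (columns.Count == 0) return Array.Empty<double[]>();
    int bars = columns[0].Count;
    foreach (var col in columns)
        if (col.Count != bars)
            throw new ArgumentException("All series must have the same number of bars.");

    var rows = new double[bars][];
    for (int bar = 0; bar < bars; bar++)
    {
        rows[bar] = new double[columns.Count];
        for (int c = 0; c < columns.Count; c++)
            rows[bar][c] = columns[c][bar]; // row = bar, column = Input
    }
    return rows;
}

// Two Inputs, three bars each.
var inputs = new List<List<double>>
{
    new List<double> { 0.1, 0.2, 0.3 }, // Input 1
    new List<double> { -0.5, 0.0, 0.5 } // Input 2
};
double[][] samples = ToRows(inputs);
Console.WriteLine(samples.Length);    // 3
Console.WriteLine(samples[0].Length); // 2
```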

public abstract (double, double) TrainEpoch(Model model)

Deep Learning calls TrainEpoch when a single Epoch of training should be conducted on the Model specified in the model parameter. Your implementation should perform at least the following two steps:

- Perform a round of training using the PreparedInSampleInputs of the Model, generating predicted outputs, and compare these with the PreparedInSampleOutputs. The error/loss/deviation from the actual outputs should be assigned to the first item of the Tuple return value.

- Perform an evaluation of the PreparedOutOfSampleInputs, generating predicted outputs, and compare these with PreparedOutOfSampleOutputs. An error/loss/deviation value should be assigned to the second item of the Tuple return value.
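The two steps can be sketched in C# with a toy model standing in for a real network: a single linear unit trained by stochastic gradient descent, with mean squared error as the loss. Everything here is hypothetical (the model, the learning rate, and the array arguments, which assume the Prepared* lists were already converted to one row per bar during SetupTraining), and it assumes a single Output. The in-sample loss is accumulated from the predictions made during the training pass; some engines instead re-evaluate the in-sample data after the pass.

```csharp
using System;

// Hypothetical model state: one linear unit y = w.x + b, updated by SGD.
double[] weights = new double[2];
double bias = 0.0;
const double learningRate = 0.1;

double PredictRow(double[] x)
{
    double y = bias;
    for (int i = 0; i < x.Length; i++) y += weights[i] * x[i];
    return y;
}

// One Epoch: a training pass over the in-sample rows, then an evaluation-only
// pass over the out-of-sample rows. Returns (in-sample MSE, out-of-sample MSE).
(double, double) TrainEpoch(double[][] inX, double[] inY, double[][] oosX, double[] oosY)
{
    double inLoss = 0.0;
    for (int n = 0; n < inX.Length; n++)
    {
        double err = PredictRow(inX[n]) - inY[n];
        inLoss += err * err; // loss of the prediction made before this update
        // Gradient of 0.5*err^2 is err*x[i] for each weight and err for the bias.
        for (int i = 0; i < weights.Length; i++) weights[i] -= learningRate * err * inX[n][i];
        bias -= learningRate * err;
    }

    double oosLoss = 0.0;
    for (int n = 0; n < oosX.Length; n++)
    {
        double err = PredictRow(oosX[n]) - oosY[n]; // no weight updates here
        oosLoss += err * err;
    }
    return (inLoss / inX.Length, oosLoss / oosX.Length);
}

// Toy data where the target is 0.5*x0 - 0.25*x1.
double[][] trainX = { new[] { 1.0, 0.0 }, new[] { 0.0, 1.0 }, new[] { 1.0, 1.0 }, new[] { -1.0, 0.5 } };
double[] trainY = { 0.5, -0.25, 0.25, -0.625 };
double[][] testX = { new[] { 0.5, -0.5 }, new[] { -0.5, -1.0 } };
double[] testY = { 0.375, 0.0 };

for (int epoch = 1; epoch <= 5; epoch++)
{
    var (inMse, oosMse) = TrainEpoch(trainX, trainY, testX, testY);
    Console.WriteLine($"Epoch {epoch}: in-sample {inMse:F4}, out-of-sample {oosMse:F4}");
}
```

Because the out-of-sample pass never updates the weights, the second value measures how well each Epoch generalizes to data the Model has not trained on.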

public abstract void FinishTraining(Model model)

Deep Learning calls FinishTraining when a training session has completed. You can perform any necessary cleanup tasks here.

Data Batching

Some Learning Engines require Input data to be split into batches when its size exceeds a certain threshold. If you need to batch the Inputs, you can use the BatchedInputsOutputs utility class to do so.
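The general idea of batching is to split the samples into consecutive groups and feed them to the framework one group at a time. The C# sketch below shows only that idea; it is not the BatchedInputsOutputs API, whose members are not documented on this page, and MakeBatches is a hypothetical helper.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: splits row-per-bar samples into consecutive batches of at
// most batchSize rows. The final batch holds whatever remains, so it may be smaller.
static List<double[][]> MakeBatches(double[][] rows, int batchSize)
{
    if (batchSize <= 0) throw new ArgumentOutOfRangeException(nameof(batchSize));
    var result = new List<double[][]>();
    for (int start = 0; start < rows.Length; start += batchSize)
    {
        int count = Math.Min(batchSize, rows.Length - start);
        var batch = new double[count][];
        Array.Copy(rows, start, batch, 0, count);
        result.Add(batch);
    }
    return result;
}

var samples = new double[10][];
for (int i = 0; i < samples.Length; i++) samples[i] = new double[] { i };
var batches = MakeBatches(samples, 4);
Console.WriteLine(batches.Count); // 3 (sizes 4, 4, 2)
```

On .NET 6 and later, Enumerable.Chunk produces the same grouping (samples.Chunk(4)).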

Persisting Model State

public abstract void SaveState(Model mdl, string fileName)

Override SaveState to persist the internal state of the Model in the mdl parameter to a file with the specified fileName. For a neural network, you'll be saving the various weights and biases that represent the connections between nodes. If you are adapting a third-party neural network or learning library, it should possess methods allowing you to persist and load a model's internal state.

Here is an example of the SaveState implementation for the Encog Learning Engine:

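//save weights
//_nn is the engine's Encog BasicNetwork; _fileLock is an object used to serialize file access
//(namespaces: System.IO for FileInfo, Encog.Persist for EncogDirectoryPersistence)
public override void SaveState(Model model, string fileName)
{
    if (_nn == null) //Encog BasicNetwork instance
        return; //nothing has been created or loaded yet
    lock (_fileLock)
    {
        FileInfo fi = new FileInfo(fileName);
        EncogDirectoryPersistence.SaveObject(fi, _nn);
    }
}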

public abstract void LoadState(Model mdl, string fileName);

Override LoadState to load a persisted state of a Model saved to fileName into the Model instance in the mdl parameter. Your implementation should ensure that the internal objects required by your Learning Engine to support prediction are created.

Here is the accompanying example for Encog:

//load NN with weights
public override void LoadState(Model model, string fileName)
{
    lock (_fileLock)
    {
        //no saved state: leave the network unchanged
        if (!File.Exists(fileName))
            return;
        FileInfo fi = new FileInfo(fileName);
        //"as" yields null if the file does not contain a BasicNetwork
        _nn = EncogDirectoryPersistence.LoadObject(fi) as BasicNetwork;
    }
}
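For an engine that keeps its learned parameters in plain arrays rather than in a third-party object, SaveState and LoadState can be written with BinaryWriter and BinaryReader. A minimal C# sketch, assuming a hypothetical single-layer state (a weight array plus a bias); SaveWeights and LoadWeights are illustrative helpers that a real engine would call from its overrides, and the file format is invented for this example.

```csharp
using System;
using System.IO;

// Serializes access to the state file, like _fileLock in the Encog example.
object fileLock = new object();

// Hypothetical file format: weight count, then each weight, then the bias.
void SaveWeights(string fileName, double[] weights, double bias)
{
    lock (fileLock)
    {
        using var writer = new BinaryWriter(File.Create(fileName));
        writer.Write(weights.Length);
        foreach (double w in weights) writer.Write(w);
        writer.Write(bias);
    }
}

// Returns null when the file is missing, mirroring the early return in the Encog LoadState.
(double[] Weights, double Bias)? LoadWeights(string fileName)
{
    lock (fileLock)
    {
        if (!File.Exists(fileName)) return null;
        using var reader = new BinaryReader(File.OpenRead(fileName));
        var weights = new double[reader.ReadInt32()];
        for (int i = 0; i < weights.Length; i++) weights[i] = reader.ReadDouble();
        return (weights, reader.ReadDouble());
    }
}

string path = Path.Combine(Path.GetTempPath(), "engine-state-demo.bin");
SaveWeights(path, new[] { 0.5, -0.25 }, 0.1);
var state = LoadWeights(path);
Console.WriteLine(state?.Weights.Length); // 2
```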

Prediction

public abstract List<List<double>> Predict(Model mdl, List<List<double>> inputs);

Override Predict to predict Outputs for the Model specified in the mdl parameter from the Input data specified in the inputs parameter. The format of the data is described in SetupTraining above. Return the predictions as a List<List<double>> containing one List<double> of values for each Output of the Model.
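A C# sketch of the data layout Predict works with, assuming a hypothetical trained state of one linear unit per Output: one List<double> per Input goes in, and one List<double> per Output, with a value for every bar, comes out. The weights are invented for the example. This page does not say whether Predict should return normalized values (for Deep Learning to pass to DeNormalize) or denormalize them itself, so the sketch leaves that step out.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical trained state: one linear unit per Output (one weight row per Output).
double[][] outputWeights = { new[] { 0.5, -0.25 } };
double[] outputBias = { 0.0 };

// Takes one List<double> per Input (all the same length) and returns one
// List<double> per Output, holding a prediction for every bar.
List<List<double>> Predict(List<List<double>> inputs)
{
    int bars = inputs.Count == 0 ? 0 : inputs[0].Count;
    var outputs = new List<List<double>>();
    for (int o = 0; o < outputWeights.Length; o++)
    {
        var series = new List<double>(bars);
        for (int bar = 0; bar < bars; bar++)
        {
            double y = outputBias[o];
            for (int i = 0; i < inputs.Count; i++)
                y += outputWeights[o][i] * inputs[i][bar];
            series.Add(y);
        }
        outputs.Add(series);
    }
    return outputs;
}

var predicted = Predict(new List<List<double>>
{
    new List<double> { 1.0, 0.0, -1.0 }, // Input 1
    new List<double> { 0.0, 1.0, 0.5 }   // Input 2
});
Console.WriteLine($"{predicted.Count} Output(s), {predicted[0].Count} bars"); // 1 Output(s), 3 bars
```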